Incorrect SiteWorx Account Quotas

There are a few common causes for quotas being incorrect or not matching the expected total:

Quotas are not enabled on the server

Incorrect quota partition listed in NodeWorx

Files group-owned by the account outside of the homedir folder

InterWorx-specific cronjobs are not running


Quotas Are Not Enabled

Information on enabling quotas can be found here.

Log in to the server at the CLI as root, either via SSH or from the terminal

Check whether quotas are enabled on the partition that holds the /home directory. If /home is located on root (‘/’), check ‘/’ as in the command below; if /home is on its own partition, check /home instead by searching for ' /home ' rather than ' / '.

mount | grep ' / '

If quotas are disabled, the output will show ‘noquota’:

[root@server ~]# mount | grep ' / '
/dev/mapper/centos-root on / type xfs (rw,relatime,attr2,inode64,noquota)
[root@server ~]#

If quotas are enabled, the output will show ‘usrquota’ and ‘grpquota’:

[root@server ~]# mount | grep ' / '
/dev/mapper/centos-root on / type xfs (rw,relatime,attr2,inode64,usrquota,grpquota)
[root@server ~]#
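The same check can be scripted. The sketch below is an illustration, not an InterWorx tool: it assumes util-linux `findmnt` is installed (normally the case on CentOS 7 and later) and treats quotas as enabled only when both `usrquota` and `grpquota` appear in the mount options, matching the output above. Other quota option spellings are reported as "unclear". The function names `quota_state` and `check_quota` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: classify the quota mount options of the filesystem holding a path.
# Assumption: "enabled" means both usrquota and grpquota are present, as in
# the mount output above; any other combination without noquota is "unclear".

# quota_state OPTIONS -> prints enabled, disabled, or unclear
quota_state() {
  local opts=",$1,"   # pad with commas so each option can be matched whole
  if [[ $opts == *,usrquota,* && $opts == *,grpquota,* ]]; then
    echo enabled
  elif [[ $opts == *,noquota,* ]]; then
    echo disabled
  else
    echo unclear
  fi
}

# check_quota PATH -> looks up the options of the filesystem containing PATH
check_quota() {
  local opts
  opts=$(findmnt -n -o OPTIONS -T "$1") || return 1
  printf '%s: quotas %s (%s)\n' "$1" "$(quota_state "$opts")" "$opts"
}

# Runs only when executed directly with a path, e.g. "bash check_quota.sh /home"
if [[ ${BASH_SOURCE[0]} == "$0" && $# -gt 0 ]]; then
  check_quota "$1"
fi
```

Because `findmnt -T` resolves a path to the filesystem that contains it, passing /home covers both layouts: it reports the root filesystem when /home lives under ‘/’.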

Incorrect Quota Partition in NodeWorx

Quotas should be enabled on the partition where the /home directory is located. If /home is located under root (‘/’), quotas should be enabled on root (‘/’). If /home is a separate partition, quotas should be enabled on /home instead.
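To confirm which of the two cases applies on a given server, the mount point that contains /home can be looked up at the CLI. This is a minimal sketch using POSIX `df -P`; it assumes the mount point contains no spaces, and the function name `quota_partition` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the mount point of the filesystem holding a path
# (default /home), i.e. the partition quotas should be enabled on.
# Assumption: the mount point has no spaces (df output is split on whitespace).

quota_partition() {
  # df -P prints a header line, then one line for the filesystem;
  # the last field of that line is the mount point.
  df -P "${1:-/home}" | awk 'NR == 2 { print $NF }'
}

# Runs only when executed directly with a path, e.g. "bash quota_partition.sh /home"
if [[ ${BASH_SOURCE[0]} == "$0" && $# -gt 0 ]]; then
  quota_partition "$1"
fi
```

It prints ‘/’ when /home sits on the root partition, or /home when /home is mounted separately.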

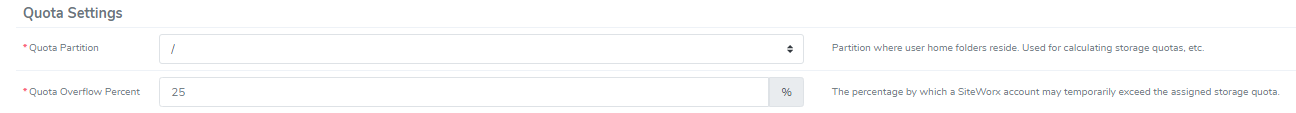

The partition that InterWorx should look at for quotas can be set from the Settings page in NodeWorx.

Log into NodeWorx from the browser (https://ip.ad.dr.ess:2443/nodeworx)

In NodeWorx, navigate to Server > Settings

At the bottom of the page, under Quota Settings, set the Quota Partition field to the correct partition: ‘/’ if /home is located under ‘/’, or /home if /home is its own partition

Click Save

Files Group-owned By the Account Outside of the Homedir Folder

The storage quota information listed in SiteWorx is determined by calculating the total size of all files group-owned by the unixuser. As such, it is not uncommon, and is often expected, for the size of the account’s homedir folder (/home/unixuser/domain.com/html) to differ from the quota information listed in SiteWorx. Among the most common reasons for the discrepancy are group-owned files located in /tmp and backups stored in a directory other than /home/unixuser/domain.com/iworx-backup. The find command tends to be the easiest way to locate group-owned files.

Log in to the server at the CLI as root, either via SSH or from the terminal

Run a find command, searching a directory by group. The following searches a directory for files group-owned by a specific unixuser, replacing {directory} and {unixuser} with the corresponding information:

find {directory} -type f -group {unixuser}

Example:

[root@server ~]# find / -type f -group examplec
find: '/proc/25076/task/25076/fdinfo/5': No such file or directory
find: '/proc/25076/fdinfo/6': No such file or directory
/var/lib/mysql/examplec_wp161/db.opt
/var/lib/mysql/examplec_wp161/wppl_commentmeta.frm
/var/lib/mysql/examplec_wp161/wppl_commentmeta.ibd
/var/lib/mysql/examplec_wp161/wppl_comments.frm
/var/lib/mysql/examplec_wp161/wppl_comments.ibd
/var/lib/mysql/examplec_wp161/wppl_links.frm
/var/lib/mysql/examplec_wp161/wppl_links.ibd
/var/lib/mysql/examplec_wp161/wppl_options.frm
[TRUNCATED]

The following command can be used to calculate the total size of all files group-owned by the unixuser in a directory, replacing {directory} and {unixuser} with the corresponding information:

find {directory} -type f -group {unixuser} -print0 | du -hc --files0-from=- | tail -n 1

Example:

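[root@server ~]# find / -type f -group examplec -print0 | du -hc --files0-from=- | tail -n 1
find: '/proc/24617/task/24617/fdinfo/5': No such file or directory
find: '/proc/24617/fdinfo/6': No such file or directory
57M total
[root@server ~]#

The "No such file or directory" messages refer to short-lived process entries under /proc that disappeared during the search, and can be ignored.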
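When the total is larger than expected, it helps to see which top-level directories hold the group-owned files. The sketch below assumes GNU find (for `-printf`) and sums `%k`, the disk space each file uses in 1 KiB blocks, per first path component under the search root. The function name `usage_by_dir` is hypothetical, and the totals approximate what the quota system counts rather than replacing it.

```shell
#!/usr/bin/env bash
# Sketch: sum disk usage (KiB) of files group-owned by a user, grouped by
# the first path component under the search root, largest first.
# Assumes GNU find: %k = disk space used in 1 KiB blocks,
# %P = path relative to the starting point.

# usage_by_dir DIRECTORY GROUP
usage_by_dir() {
  local root=$1 group=$2 base=${1%/}
  # Permission errors and vanishing /proc entries are discarded.
  find "$root" -type f -group "$group" -printf '%k %P\n' 2>/dev/null |
    awk -v base="$base" '{
      kib = $1
      rel = substr($0, length($1) + 2)   # drop the "<kib> " prefix
      split(rel, parts, "/")
      total[base "/" parts[1]] += kib
    }
    END { for (d in total) print total[d], d }' |
    sort -rn
}

# Runs only when executed directly, e.g. "bash usage_by_dir.sh / examplec"
if [[ ${BASH_SOURCE[0]} == "$0" && $# -eq 2 ]]; then
  usage_by_dir "$1" "$2"
fi
```

Run against ‘/’ for the example account above, the files under /var/lib/mysql would be reported together on a single /var line, which points straight at the database files.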

Stuck InterWorx Cronjobs

Storage and bandwidth quota calculations run as part of the InterWorx cronjobs.

By default, these calculations run every five minutes as part of the Fively cron; however, this can be customized in ~iworx/iworx.ini.

If the InterWorx crons are stuck or not running, SiteWorx storage and bandwidth quotas may be inaccurate. Reasons why InterWorx cronjobs may not be running include:

A stuck cronjob process

The cronjob failed with an error

Lack of free space or memory on the server

The crond service is not running or needs to be restarted

To Check the Last Time a Cron Successfully Ran

Log in to the server at the CLI as root, either via SSH or from the terminal

In the ~iworx/var directory, there are files named last_{cron name}, where {cron name} refers to each InterWorx cron. The timestamp on each file shows the last time the corresponding cron ran successfully. If a timestamp does not match the expected date and time, the corresponding cron is either stuck or an error is keeping it from running correctly. For instance, last_fively refers to the Fively cron, so its timestamp should be within the last five minutes; last_daily refers to the Daily cron, so its timestamp should be within the last 24 hours, and so on. These files can be viewed with the following:

ls -la ~iworx/var/last_*

Example:

[root@server ~]# ls -la ~iworx/var/last_*
-rw------- 1 root root 0 Feb 16 11:35 /home/interworx/var/last_daily
-rw------- 1 root root 0 Feb 16 12:06 /home/interworx/var/last_fifteenly
-rw------- 1 root root 0 Feb 16 12:12 /home/interworx/var/last_fively
-rw------- 1 root root 0 Feb 16 12:10 /home/interworx/var/last_hourly
-rw------- 1 root root 0 Feb 16 00:00 /home/interworx/var/last_midnight
-rw------- 1 root root 0 Feb 16 06:49 /home/interworx/var/last_quad_daily
-rw------- 1 root root 0 Feb 11 16:50 /home/interworx/var/last_weekly
[root@server ~]#
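Checking the stamps by eye works, but a small script can compare each file's age with its cron's interval. The intervals below are assumptions inferred from the cron names (for example, quad_daily is taken to mean every six hours) and are not read from ~iworx/iworx.ini, so adjust them if the schedule has been customized. A stamp is reported as stale only when it is older than twice its interval, so a run that is merely in progress is not flagged. The sketch assumes GNU `stat` (`-c %Y`); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: flag ~iworx/var/last_* stamp files that look out of date.
# Assumption: intervals are inferred from the cron names, not read from iworx.ini.

# interval_for CRON -> expected seconds between runs
interval_for() {
  case $1 in
    fively)         echo 300 ;;
    fifteenly)      echo 900 ;;
    hourly)         echo 3600 ;;
    quad_daily)     echo 21600 ;;
    daily|midnight) echo 86400 ;;
    weekly)         echo 604800 ;;
    *)              return 1 ;;
  esac
}

# is_stale CRON MTIME NOW -> exit status 0 if older than twice the interval
is_stale() {
  local interval
  interval=$(interval_for "$1") || return 2
  (( $3 - $2 > 2 * interval ))
}

# report_stamps DIR -> one line per last_* file
report_stamps() {
  local dir=$1 now f name mtime
  now=$(date +%s)
  for f in "$dir"/last_*; do
    [[ -e $f ]] || continue
    name=${f##*/last_}
    if ! interval_for "$name" >/dev/null; then
      echo "skip  $f (unknown interval)"
      continue
    fi
    mtime=$(stat -c %Y "$f")   # modification time, seconds since the epoch
    if is_stale "$name" "$mtime" "$now"; then
      echo "STALE $f ($(( (now - mtime) / 60 )) min old)"
    else
      echo "ok    $f"
    fi
  done
}

# Runs only when executed directly, e.g. "bash check_stamps.sh ~iworx/var"
if [[ ${BASH_SOURCE[0]} == "$0" && $# -gt 0 ]]; then
  report_stamps "$1"
fi
```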

Stuck Cronjob Processes

Log in to the server at the CLI as root, either via SSH or from the terminal

Use the ps auxf command to look for long-running cron processes. Piping the output through grep isolates a specific pattern. For example, the following searches for all current processes with “fively” in the command line:

[root@server ~]# ps auxf | grep fively
iworx 21677 0.0 0.0 113292 236 ? SNs 2021 0:00 \_ /bin/bash -c cd /home/interworx/cron ; ./iworx.pex --fively
root 21681 0.0 0.0 330124 2528 ? SN 2021 0:01 \_ /home/interworx/bin/php -c /home/interworx/etc iworx.php --fively
root 32999 0.0 0.0 112796 1680 pts/0 SN+ 15:51 0:00 \_ grep --color=auto fively
[root@server ~]#

The START column shows that both InterWorx processes have been running since 2021, far longer than a five-minute cron should take, so they are stuck.

Kill the stuck processes using the kill command. More detailed information on managing and killing processes can be found here.

[root@server ~]# kill -9 21677
[root@server ~]# ps auxf | grep fively
root 32999 0.0 0.0 112796 1680 pts/0 SN+ 15:51 0:00 \_ grep --color=auto fively
[root@server ~]#
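Rather than scanning ps auxf output by hand, InterWorx cron processes can be filtered by how long they have been running. This sketch assumes procps-ng `ps` with the `etimes` column (elapsed seconds since the process started) and matches the exact `--fively`-style argument seen in the ps output above, which also keeps the grep line itself out of the results. The 30-minute default threshold and the function name `filter_stuck` are hypothetical; review the listed PIDs before killing anything.

```shell
#!/usr/bin/env bash
# Sketch: list InterWorx cron processes running longer than a threshold.
# Input lines are "PID ETIMES ARGS...", as printed by
#   ps -eo pid=,etimes=,args=   (procps-ng; etimes = elapsed seconds)

# filter_stuck CRON MAX_SECONDS < ps lines
filter_stuck() {
  awk -v flag="--$1" -v max="$2" '
    $2 + 0 > max + 0 {
      # Print the line only if one argument is exactly "--<cron>".
      for (i = 3; i <= NF; i++) if ($i == flag) { print; next }
    }'
}

# Runs only when executed directly, e.g. "bash find_stuck.sh fively"
if [[ ${BASH_SOURCE[0]} == "$0" && $# -gt 0 ]]; then
  ps -eo pid=,etimes=,args= | filter_stuck "$1" "${2:-1800}"
fi
```

The first column of each printed line is the PID to pass to kill.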

To Check for Errors in the InterWorx Cron Log

Logging related to InterWorx cronjobs is found in ~iworx/var/log/cron.log, or in one of the rotated cron.log-{date} files located in ~iworx/var/log, where {date} corresponds to the date the log was rotated.

While these logs can be viewed in NodeWorx, it is recommended to view them from the CLI with a text editor, which makes navigating and searching them easier.
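For a quick first pass before opening the logs in an editor, the current and rotated logs can be searched together. This sketch matches the words "error" or "fatal" case-insensitively; that pattern is an assumption, since the exact severity labels InterWorx writes for failures are not shown here. Rotated logs are assumed to be plain text named cron.log-{date}; compressed rotations would need `zgrep` instead. The function name `cron_log_errors` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: search cron.log and its rotated copies for lines mentioning errors.
# Assumption: the "error|fatal" pattern; InterWorx's actual labels may differ.

# cron_log_errors LOGDIR -> "file:line" for each match, rotated logs first
cron_log_errors() {
  local dir=${1%/} f
  for f in "$dir"/cron.log-* "$dir"/cron.log; do
    [[ -f $f ]] || continue
    grep -H -i -E 'error|fatal' "$f"
  done
  return 0   # grep exits 1 when a file has no matches; that is not a failure here
}

# Runs only when executed directly, e.g. "bash cron_errors.sh ~iworx/var/log"
if [[ ${BASH_SOURCE[0]} == "$0" && $# -gt 0 ]]; then
  cron_log_errors "$1"
fi
```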

Insufficient Space or Memory

InterWorx cronjobs will fail if there is not enough space or memory on the server for them to properly run or complete. One indication this may be the case is if logging in ~iworx/var/log/cron.log shows that the cronjobs started and then immediately ended, without any other logging. For example:

2023-02-09 16:46:01.39957 [nosess-2pca-vcyx-CLI] [NOTICE]: iworx.php --fifteenly : iworx.php
2023-02-09 16:46:01.40084 [nosess-2pca-vcyx-CLI] [INFO] : script end : iworx.php
2023-02-09 16:48:01.54442 [nosess-6wrl-epjv-CLI] [INFO] : script begin : iworx.php
2023-02-09 16:48:01.54501 [nosess-6wrl-epjv-CLI] [NOTICE]: iworx.php --fively : iworx.php
2023-02-09 16:48:01.54634 [nosess-6wrl-epjv-CLI] [INFO] : script end : iworx.php
2023-02-09 16:53:01.15921 [nosess-er2u-fes8-CLI] [INFO] : script begin : iworx.php
2023-02-09 16:53:01.15986 [nosess-er2u-fes8-CLI] [NOTICE]: iworx.php --fively : iworx.php
2023-02-09 16:53:01.16127 [nosess-er2u-fes8-CLI] [INFO] : script end : iworx.php
2023-02-09 16:58:01.57041 [nosess-kvga-yuf5-CLI] [INFO] : script begin : iworx.php
2023-02-09 16:58:01.57103 [nosess-kvga-yuf5-CLI] [NOTICE]: iworx.php --fively : iworx.php
2023-02-09 16:58:01.57239 [nosess-kvga-yuf5-CLI] [INFO] : script end : iworx.php
2023-02-09 17:01:01.37188 [nosess-uuy1-ftld-CLI] [INFO] : script begin : iworx.php
2023-02-09 17:01:01.37245 [nosess-uuy1-ftld-CLI] [NOTICE]: iworx.php --fifteenly : iworx.php
2023-02-09 17:01:01.37391 [nosess-uuy1-ftld-CLI] [INFO] : script end : iworx.php
2023-02-09 17:03:01.53289 [nosess-ubti-6n0b-CLI] [INFO] : script begin : iworx.php
2023-02-09 17:03:01.53344 [nosess-ubti-6n0b-CLI] [NOTICE]: iworx.php --fively : iworx.php
2023-02-09 17:03:01.53483 [nosess-ubti-6n0b-CLI] [INFO] : script end : iworx.php
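On a busy log, this pattern can be picked out automatically. The sketch groups lines by the bracketed session tag (assumed to end in `-CLI]`, as in the excerpt above) and reports sessions that logged a `script end` with no more than three lines in total. The three-line cutoff is an assumption drawn from this excerpt, where an empty run consists only of begin, notice, and end lines; the function name `empty_runs` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: report cron sessions that began and ended with no other logging.
# Assumes each line carries a session tag like "[nosess-2pca-vcyx-CLI]".

# empty_runs < cron.log -> "<session tag> <cron name>" per empty run
empty_runs() {
  awk '
    match($0, /\[[A-Za-z0-9-]+-CLI\]/) {
      s = substr($0, RSTART, RLENGTH)
      if (!(s in lines)) order[++k] = s          # remember first-seen order
      lines[s]++
      if (match($0, /iworx\.php --[a-z_]+/))
        cron[s] = substr($0, RSTART + 12, RLENGTH - 12)   # text after "iworx.php --"
      if ($0 ~ /script end/) ended[s] = 1
    }
    END {
      for (i = 1; i <= k; i++) {
        s = order[i]
        if (ended[s] && lines[s] <= 3) print s, cron[s]
      }
    }'
}

# Runs only when executed directly, e.g. "bash empty_runs.sh ~iworx/var/log/cron.log"
if [[ ${BASH_SOURCE[0]} == "$0" && $# -gt 0 ]]; then
  empty_runs < "$1"
fi
```

A long, unbroken list of such sessions is the signal to check memory and disk space.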

Another indication is if running the cron manually at the CLI finishes instantly; normally, the command hangs for a short period of time while the cron runs. InterWorx crons can be run manually at the CLI with the following command, replacing {cron} with the specific cronjob (for example, fively, daily, or quad_daily):

~iworx/cron/iworx.pex --{cron}

Log in to the server at the CLI as root, either via SSH or from the terminal

To check current memory usage, use the command free -m. The results are in megabytes. In the following example, there is not enough free memory for the crons to run successfully, and the server also does not meet the minimum requirements for InterWorx because it has no swap, which directly contributes to the issue:

[root@server ~]# free -m
              total        used        free      shared  buff/cache   available
Mem:           1998        1732          85          33         180          95
Swap:             0           0           0
[root@server ~]#
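When checking several servers, the relevant figures can be pulled out of free -m automatically. This sketch assumes the procps-ng layout shown above (an available column on the Mem: line) and only reports the numbers plus a warning when no swap is configured; it deliberately does not encode InterWorx's minimum requirements, which are not listed here. The function name `mem_summary` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: summarize available memory and swap from "free -m" output.
# Assumes the procps-ng layout: Mem: total used free shared buff/cache available

mem_summary() {
  awk '
    /^Mem:/  { avail = $7 }   # "available" column, in MB
    /^Swap:/ { swap = $2 }    # total swap, in MB
    END {
      printf "available_mb=%s swap_mb=%s\n", avail, swap
      if (swap + 0 == 0) print "warning: no swap configured"
    }'
}

if [[ ${BASH_SOURCE[0]} == "$0" ]]; then
  free -m | mem_summary
fi
```

For the example output above, this reports 95 MB available and flags the missing swap.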

To check the current storage usage, use the command df -h. In the following example, the ‘/’ partition is 100% full, which is causing the cronjobs to fail:

[root@server ~]# df -h
Filesystem      Size  Used Avail Use% Mounted on
devtmpfs        1.8G     0  1.8G   0% /dev
tmpfs           1.9G     0  1.9G   0% /dev/shm
tmpfs           1.9G  193M  1.7G  11% /run
tmpfs           1.9G     0  1.9G   0% /sys/fs/cgroup
/dev/nvme0n1p1   10G  9.7G  0.3G 100% /
tmpfs           373M     0  373M   0% /run/user/250
tmpfs           373M     0  373M   0% /run/user/0
[root@server ~]#
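The same check can flag any filesystem that is nearly full. This sketch reads POSIX `df -P` output, whose columns match the df -h example above, and prints the mount points whose Use% is at or above a threshold. The 90% default and the function name `full_filesystems` are hypothetical, and mount points containing spaces are not handled.

```shell
#!/usr/bin/env bash
# Sketch: list filesystems whose Use% meets or exceeds a threshold.
# Input is df output: Filesystem Size Used Avail Use% Mounted-on

# full_filesystems [THRESHOLD] < df output -> "<mount point> <use%>"
full_filesystems() {
  awk -v limit="${1:-90}" '
    NR > 1 {                          # skip the header line
      use = $5
      sub(/%$/, "", use)              # "100%" -> "100"
      if (use + 0 >= limit + 0) print $NF, $5
    }'
}

if [[ ${BASH_SOURCE[0]} == "$0" ]]; then
  df -P | full_filesystems "${1:-90}"
fi
```

Against the example above, only the root filesystem is reported, as "/ 100%".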

Crond is Not Running or Needs to be Restarted

If the crond service is not running or is stuck in some manner, crons, including the InterWorx crons, will not run correctly.

Logging for the crond service can be found in /var/log/cron or in a rotated cron-{date} log, also located in /var/log, where {date} corresponds to the date the log was rotated.

Log in to the server at the CLI as root, either via SSH or from the terminal

Check the status of the crond service using systemctl. In the following example, the crond service is not running:

[root@server ~]# systemctl status crond
● crond.service - Command Scheduler
   Loaded: loaded (/usr/lib/systemd/system/crond.service; enabled; vendor preset: enabled)
   Active: inactive (dead) since Thu 2023-02-16 14:51:42 EST; 9s ago
  Process: 19085 ExecStart=/usr/sbin/crond -n $CRONDARGS (code=exited, status=0/SUCCESS)
 Main PID: 19085 (code=exited, status=0/SUCCESS)
Feb 09 18:02:01 server crond[19085]: (CRON) INFO (RANDOM_DELAY will be scaled with factor 63% if used.)
Feb 09 18:02:01 server crond[19085]: (examplec) ORPHAN (no passwd entry)
Feb 09 18:02:01 server crond[19085]: (CRON) INFO (running with inotify support)
Feb 09 18:02:01 server crond[19085]: (CRON) INFO (@reboot jobs will be run at computer's startup.)
Feb 10 03:37:01 server crond[19085]: (examplec) ORPHAN (no passwd entry)
Feb 10 11:21:01 server crond[19085]: (examplec) ORPHAN (no passwd entry)
Feb 10 14:12:01 server crond[19085]: (examplec) RELOAD (/var/spool/cron/examplec)
Feb 16 14:51:42 server crond[19085]: (CRON) INFO (Shutting down)
Feb 16 14:51:42 server systemd[1]: Stopping Command Scheduler...
Feb 16 14:51:42 server systemd[1]: Stopped Command Scheduler.
[root@server ~]#

Start or restart the crond service using systemctl:

systemctl start crond

or

systemctl restart crond
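Where this check is run often, the status test and the start can be combined so crond is only started when systemd reports it as inactive. `systemctl is-active --quiet` exits with status 0 only for an active unit. The `SYSTEMCTL` override exists purely so the logic can be exercised with a stub; it is a hypothetical convenience, not part of InterWorx or systemd, and the function name `ensure_active` is likewise hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: start a systemd service (default: crond) only if it is not active.
# SYSTEMCTL may name a stub command for testing (hypothetical convenience).

ensure_active() {
  local svc=${1:-crond} ctl=${SYSTEMCTL:-systemctl}
  if "$ctl" is-active --quiet "$svc"; then
    echo "$svc is active"
  else
    echo "$svc is not active; starting it"
    "$ctl" start "$svc"
  fi
}

# Runs only when executed directly as root, e.g. "bash ensure_active.sh crond"
if [[ ${BASH_SOURCE[0]} == "$0" && $# -gt 0 ]]; then
  ensure_active "$1"
fi
```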